In online retail, success hinges on more than just offering quality products at competitive prices. As eCommerce catalogs expand and consumer expectations soar, businesses face an increasingly complex challenge: How do you effectively organize, categorize, and present your vast product assortments in a way that enhances discoverability and drives sales?

Having complete and correct product catalog data is key. Effective product attribute tagging, a crucial yet frequently undervalued capability, helps achieve this accuracy and completeness. Traditional methods of tagging product attributes have long struggled with scalability, consistency, accuracy, and speed, but fundamentally new ways of addressing these challenges are taking hold. They follow from the revolution brought about by Large Language Models (LLMs), but take the form of Small Language Models (SLMs) or, more precisely, Domain-Specific Language Models. These can be considered foundational models in that they solve a wide variety of downstream tasks, albeit within specific domains. Within those domains, they are far more efficient than a general-purpose LLM and perform much better on those tasks.

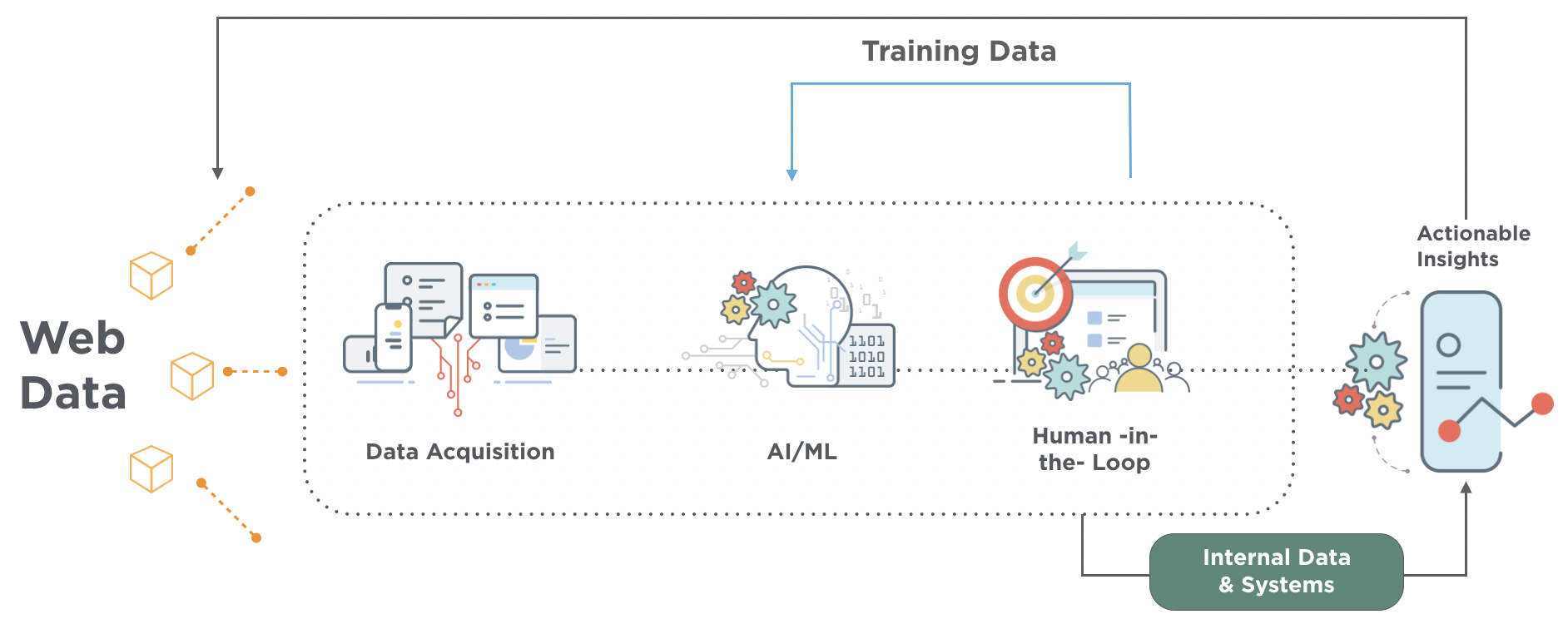

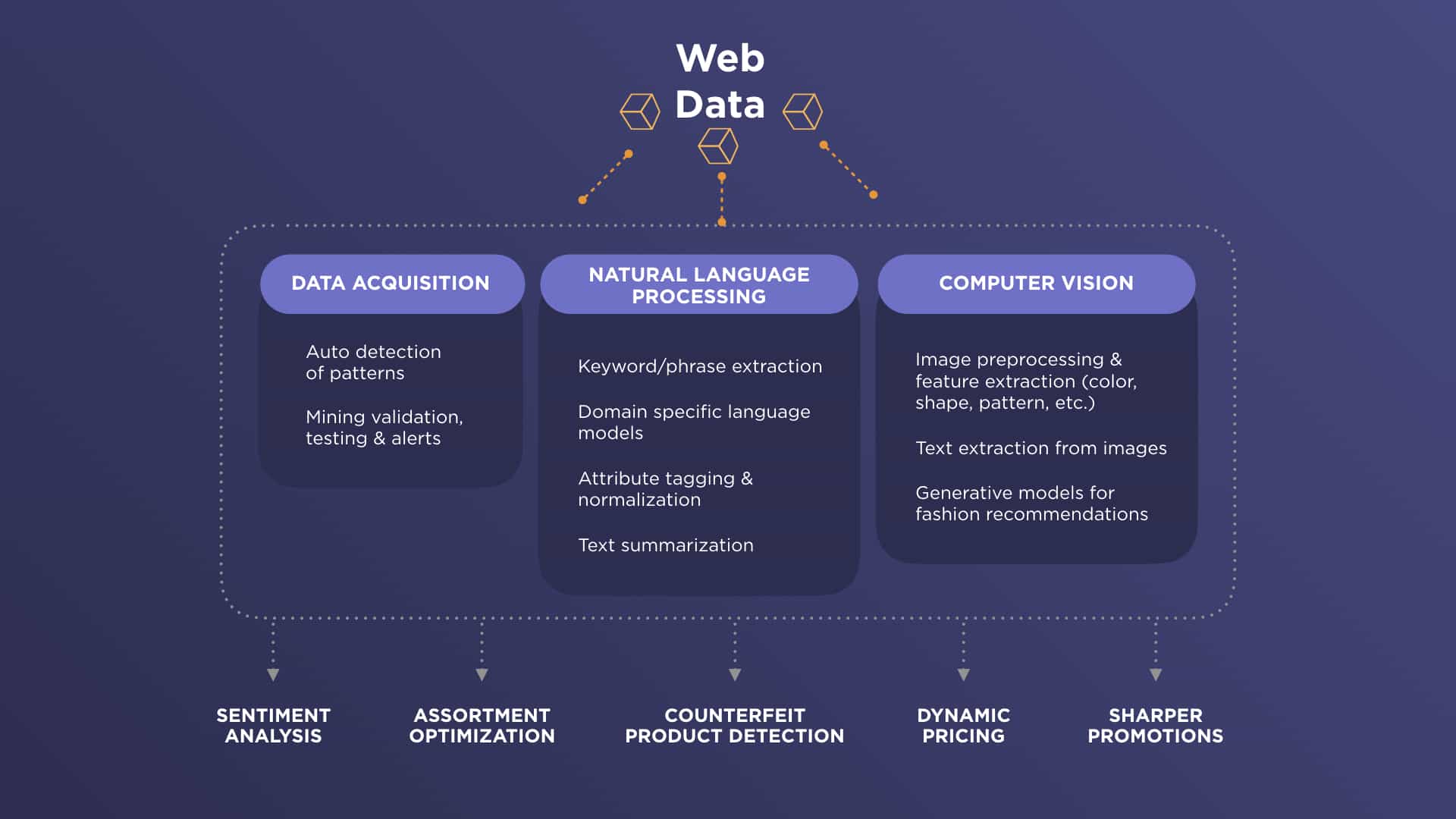

Retail Domain Language Models (RLMs) have the potential to transform the eCommerce customer journey. As always, it’s never a binary choice. In fact, LLMs can be a great starting point since they provide an enhanced semantic understanding of the world at large: they can be used to mine structured information (e.g., product attributes and values) out of unstructured data (e.g., product descriptions), create baseline domain knowledge (e.g., manufacturer-brand mappings), augment information (e.g., image to prompt), and create first-cut training datasets.

Powered by cutting-edge Generative AI and RLMs, next-generation attribute tagging solutions are transforming how online retailers manage their product catalog data, optimize their assortment, and deliver superior shopping experiences. As a new paradigm in search emerges – based more on intent and outcome, powered by natural language queries and GenAI-based Search Agents – the capability to create complete catalog information and rich semantics becomes increasingly critical.

In this post, we’ll explore the crucial role of attribute tagging in eCommerce, delve into the limitations of conventional tagging methods, and unveil how DataWeave’s innovative AI-driven approach is helping businesses stay ahead in the competitive digital marketplace.

Why Product Attribute Tagging is Important in eCommerce

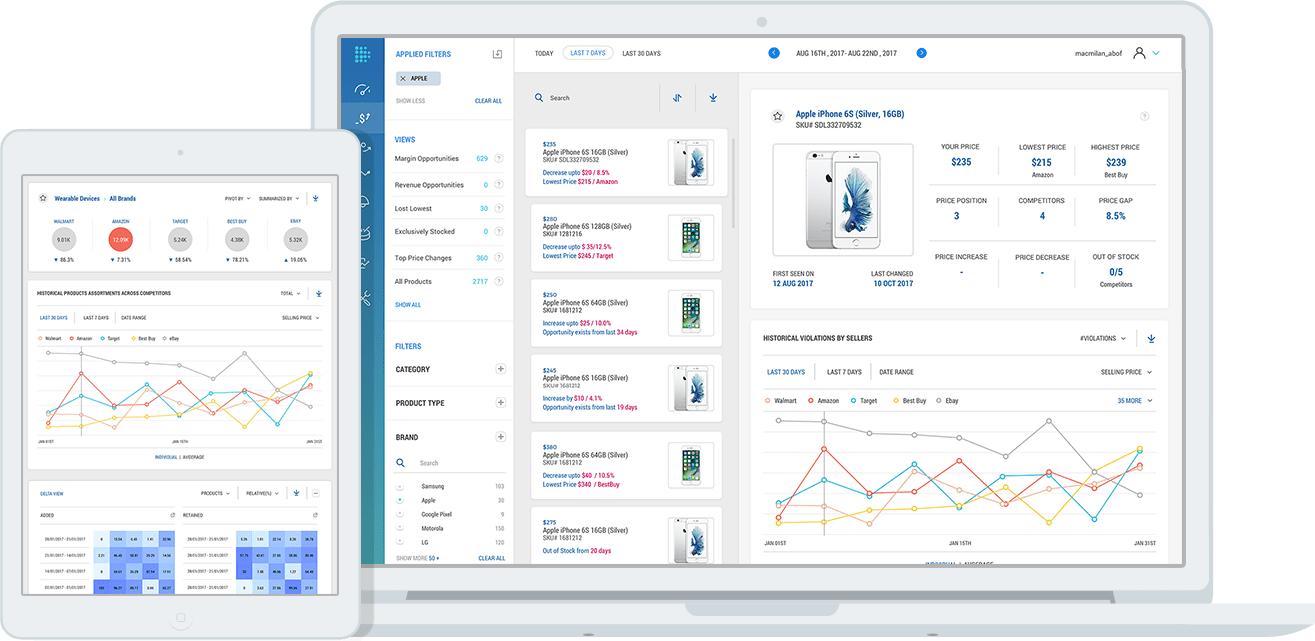

As the eCommerce landscape continues to evolve, the importance of attribute tagging will only grow, making it a pertinent focus for forward-thinking online retailers. By investing in robust attribute tagging systems, businesses can gain a competitive edge through improved product comparisons, more accurate matching, understanding intent, and enhanced customer search experiences.

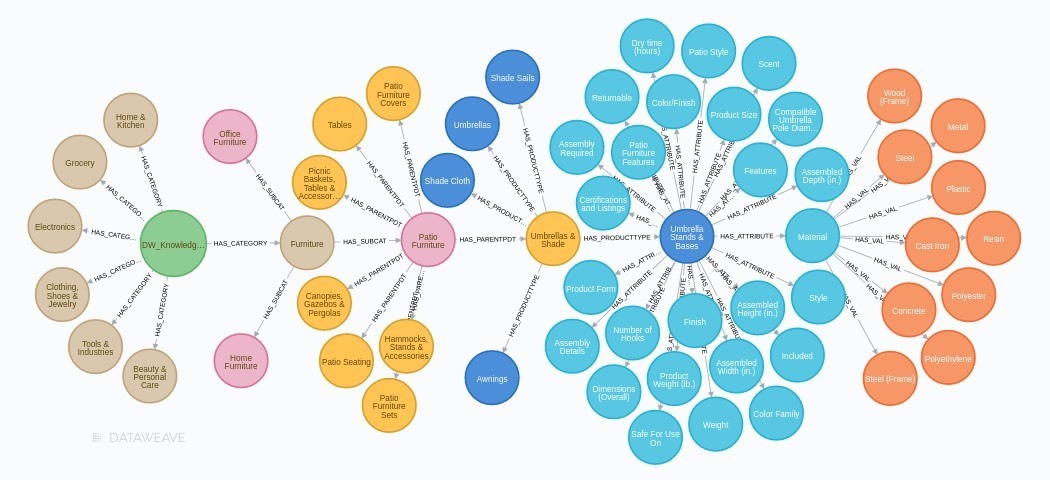

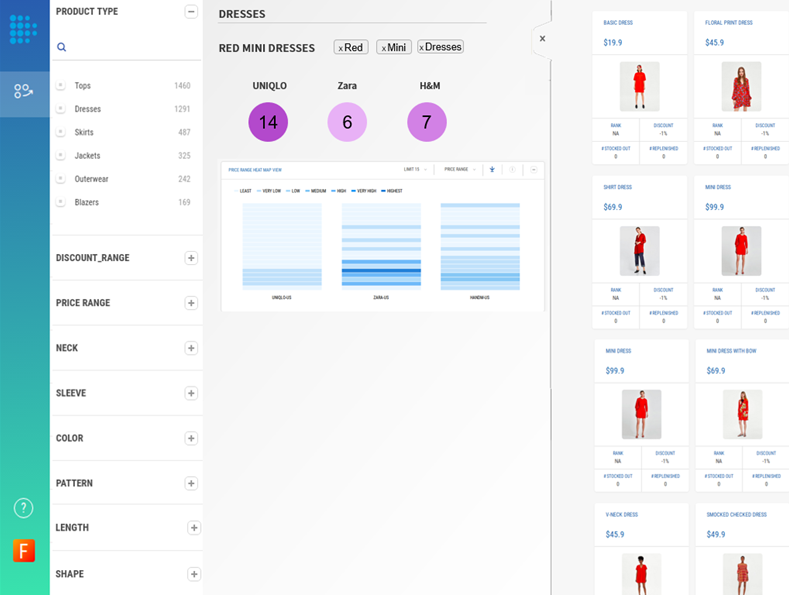

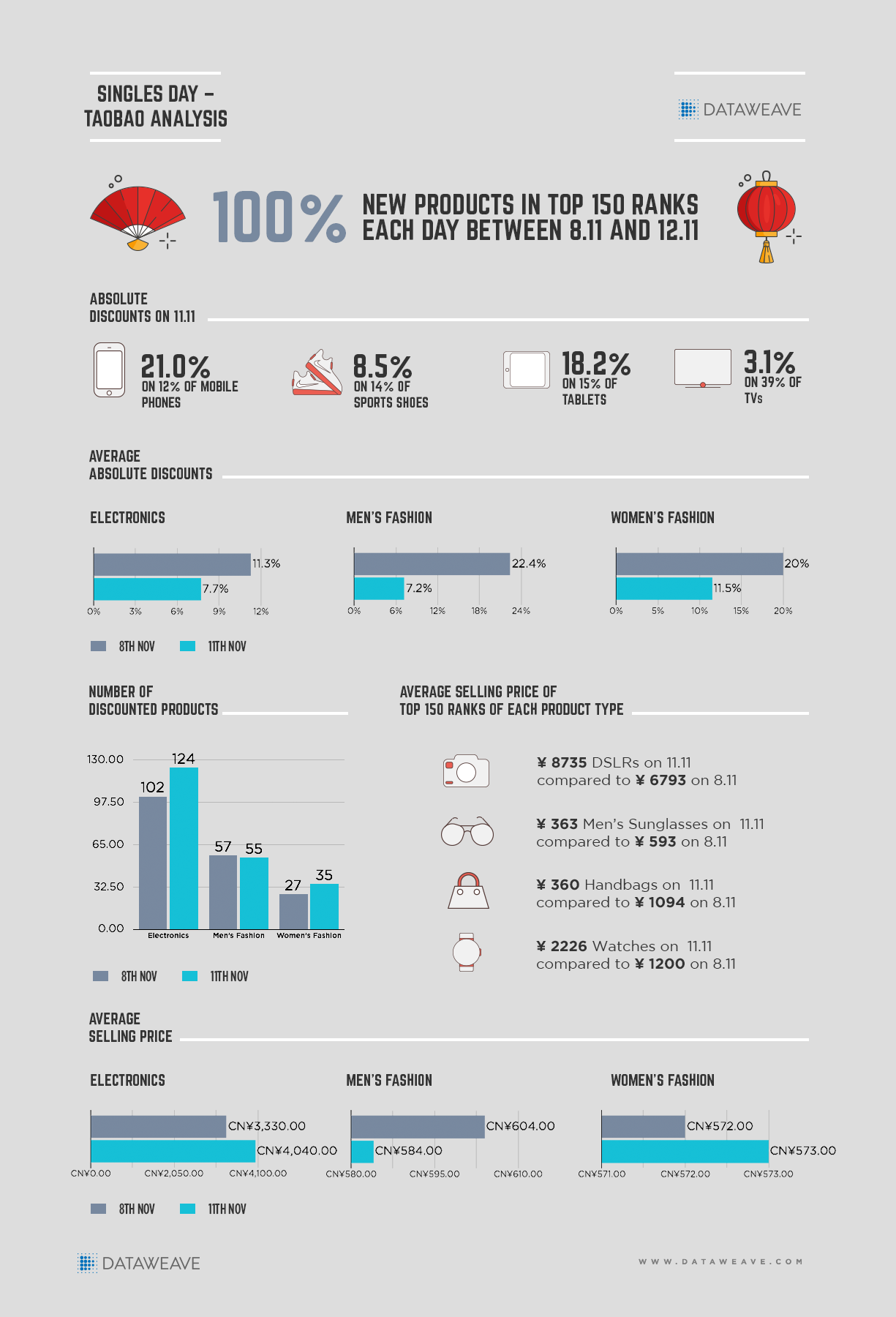

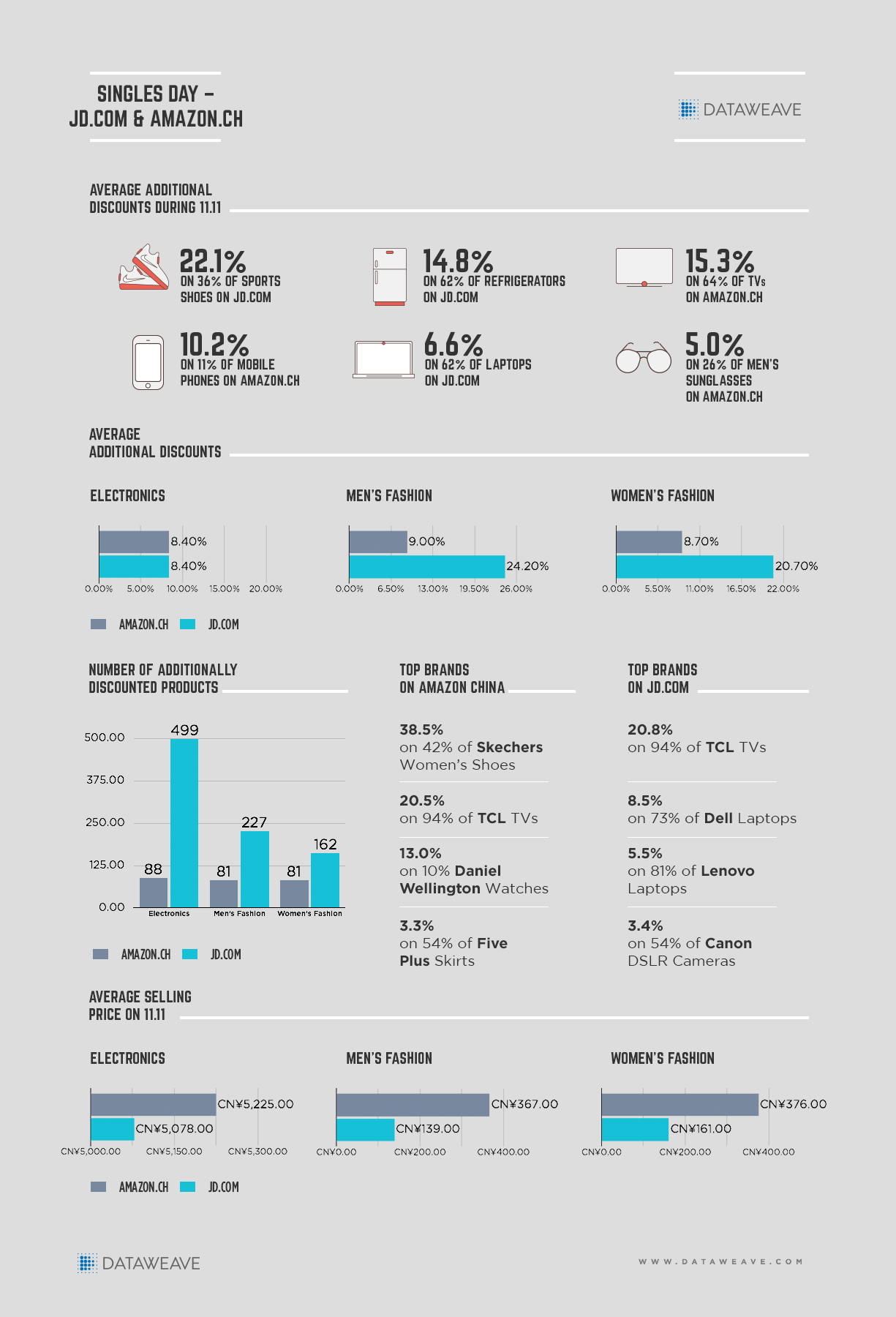

Taxonomy Comparison and Assortment Gap Analysis

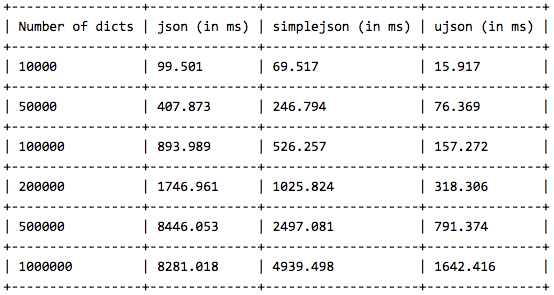

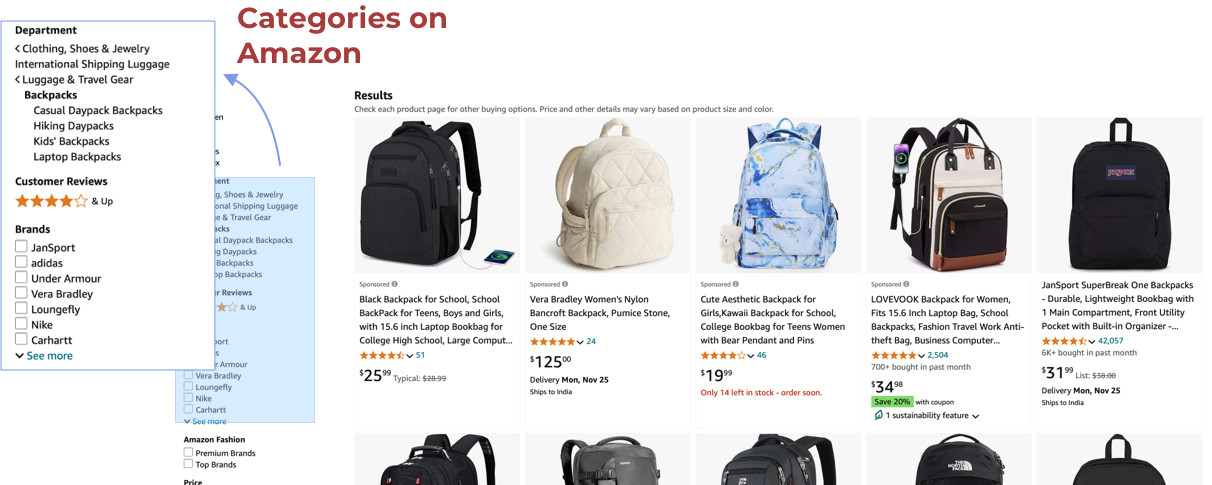

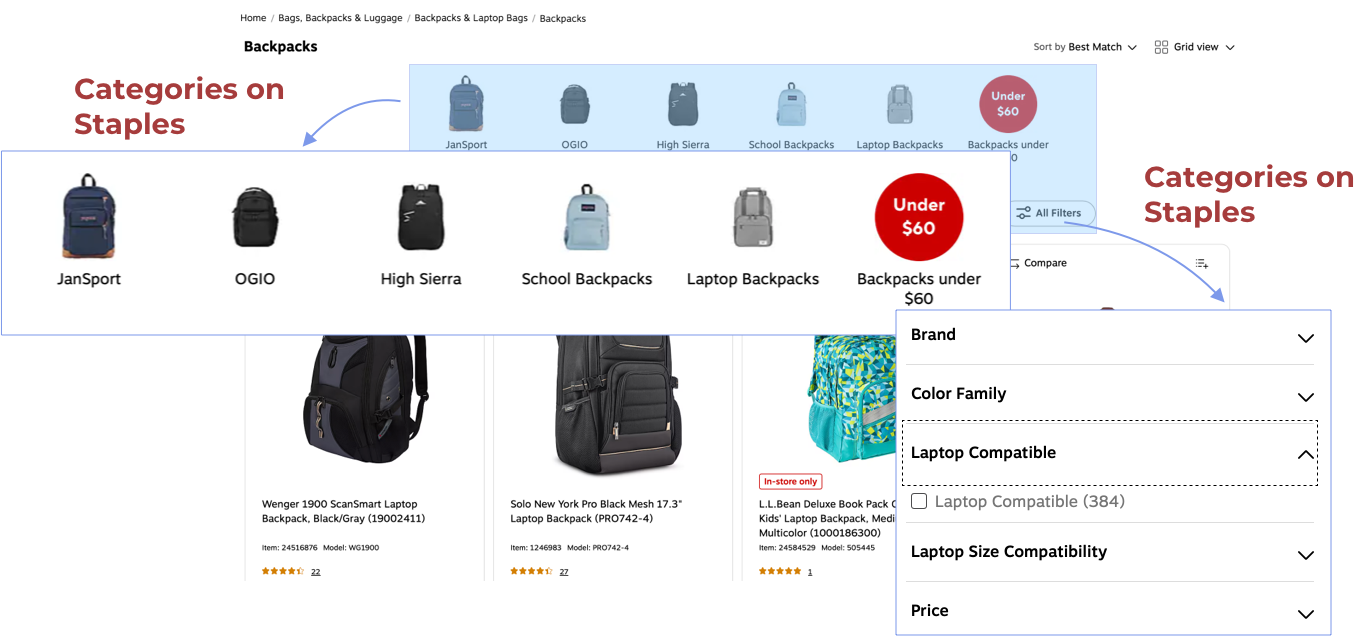

Products are categorized and organized differently on different retail websites. Comparing taxonomies helps in understanding focus categories and potential gaps in assortment breadth relative to one’s competitors: missing product categories, sizes, variants, or brands. It also gives insights into competitors’ navigation patterns and information architecture. These insights can help make the search and navigation experience more efficient by fine-tuning product descriptions to include more attributes and/or adding relevant filters to category listing pages.
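As a rough illustration of a breadth gap check, the sketch below compares the tagged attribute values of two retailers using set differences. The function name `assortment_gaps` and all sample values are made up for this example; it is a minimal sketch and not DataWeave's implementation.

```python
def assortment_gaps(own, competitor):
    """For each attribute, list the values the competitor carries that we do not."""
    gaps = {}
    for attribute, competitor_values in competitor.items():
        missing = set(competitor_values) - set(own.get(attribute, ()))
        if missing:
            gaps[attribute] = sorted(missing)
    return gaps


# Made-up tagged values for a "Backpacks" category at two retailers.
own_backpacks = {
    "subcategory": {"School Backpacks", "Laptop Backpacks"},
    "capacity": {"20L", "30L"},
}
competitor_backpacks = {
    "subcategory": {"School Backpacks", "Laptop Backpacks", "Hiking Backpacks"},
    "capacity": {"20L", "30L", "45L"},
}

gaps = assortment_gaps(own_backpacks, competitor_backpacks)
print(gaps)
```

The same comparison can be run in the other direction to see where one's own assortment is broader than a competitor's.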

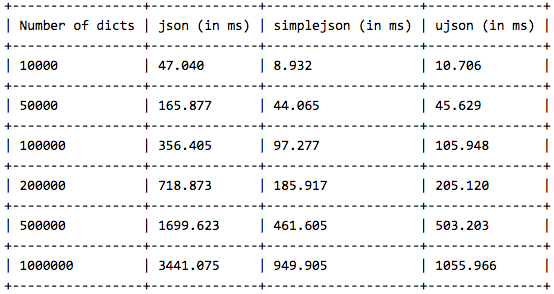

For instance, check out the different Backpack categories on Amazon and Staples in the images below.

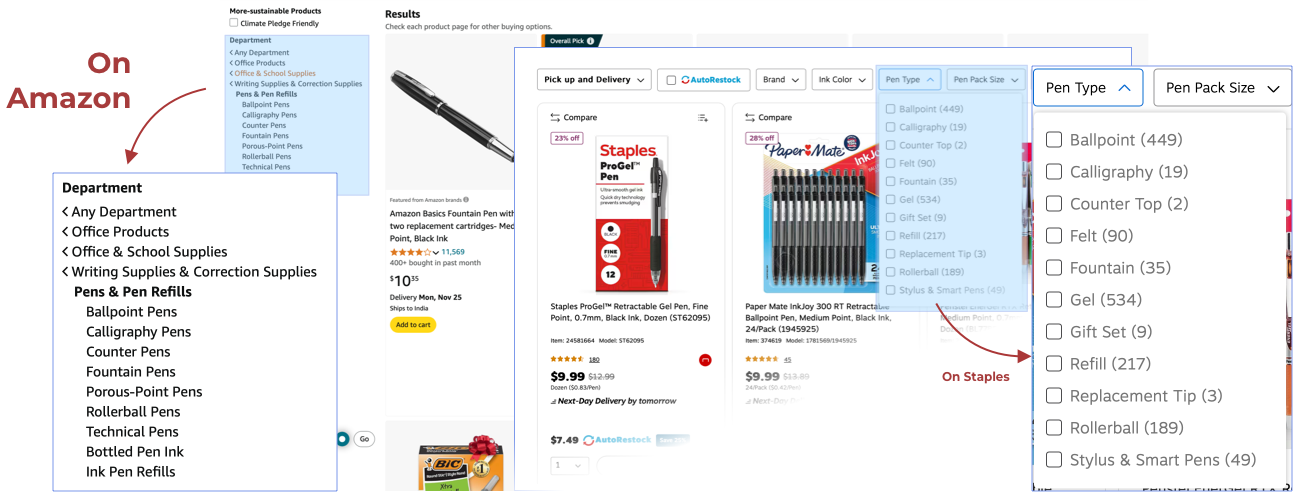

Or look at the nomenclature of categories for “Pens” on Amazon (left side of the image) and Staples (right side of the image) in the image below.

Assortment Depth Analysis

Another big challenge in eCommerce is the lack of standardization in retailer taxonomy. This inconsistency makes it difficult to compare the depth of product assortments across different platforms effectively. For instance, retailers may categorize smartphones in quite different ways:

- Retailer A might organize them under “Electronics > Mobile Phones > Smartphones”

- Retailer B could use “Technology > Phones & Accessories > Cell Phones”

- Retailer C might opt for “Consumer Electronics > Smartphones & Tablets”

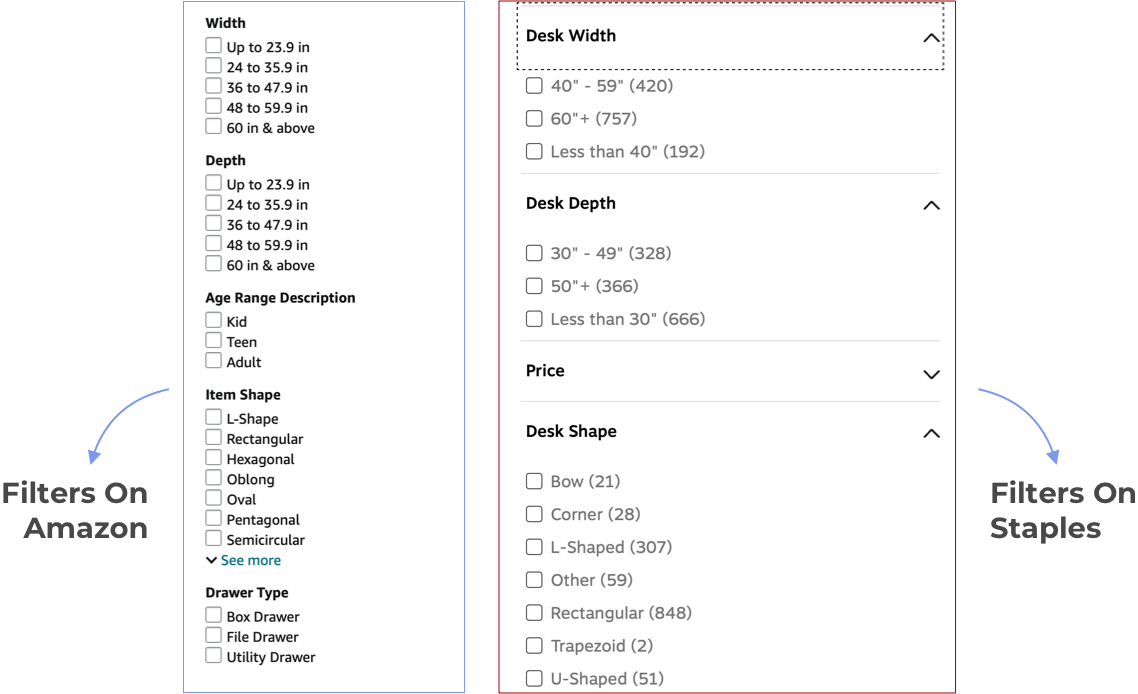

Inconsistent nomenclature and grouping create a significant hurdle for businesses trying to gain a competitive edge through assortment analysis. The challenge is exacerbated if you want to analyze assortment depth in detail for one or more product attributes. For instance, look at the image below to get an idea of the several attribute variations for “Desks” on Amazon and Staples.

Custom categorization through attribute tagging is essential for conducting granular assortment comparisons, allowing companies to accurately assess their product offerings against those of competitors.
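One way to picture this is to count assortment depth by a tagged attribute instead of by each retailer's category path. In the sketch below, the `product_type` tag, the listing records, and the function `assortment_depth` are all assumptions made for illustration; the category paths are the three examples given above.

```python
from collections import Counter

# Made-up listings: the category path differs per retailer, the tag does not.
listings = [
    {"retailer": "A", "path": "Electronics > Mobile Phones > Smartphones",
     "tags": {"product_type": "smartphone"}},
    {"retailer": "A", "path": "Electronics > Mobile Phones > Smartphones",
     "tags": {"product_type": "smartphone"}},
    {"retailer": "B", "path": "Technology > Phones & Accessories > Cell Phones",
     "tags": {"product_type": "smartphone"}},
    {"retailer": "C", "path": "Consumer Electronics > Smartphones & Tablets",
     "tags": {"product_type": "smartphone"}},
    {"retailer": "C", "path": "Consumer Electronics > Smartphones & Tablets",
     "tags": {"product_type": "tablet"}},
]


def assortment_depth(listings, attribute, value):
    """Count listings per retailer whose tagged attribute equals the given value."""
    return Counter(
        item["retailer"]
        for item in listings
        if item["tags"].get(attribute) == value
    )


depth = assortment_depth(listings, "product_type", "smartphone")
print(dict(depth))
```

Because the count relies on the tag, Retailer C's combined “Smartphones & Tablets” category does not inflate its smartphone depth.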

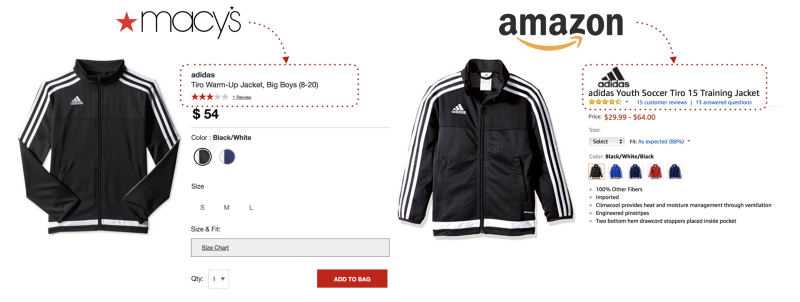

Enhancing Product Matching Capabilities

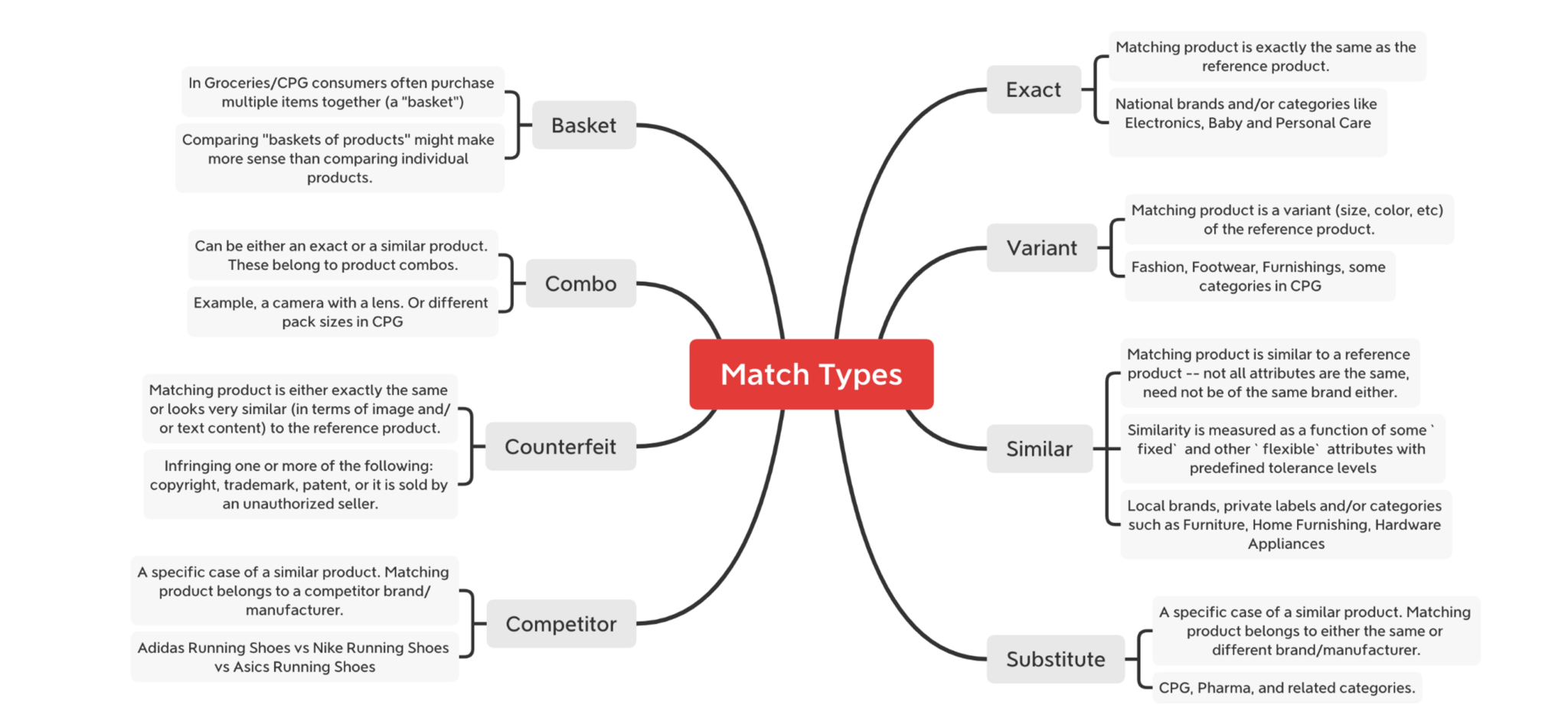

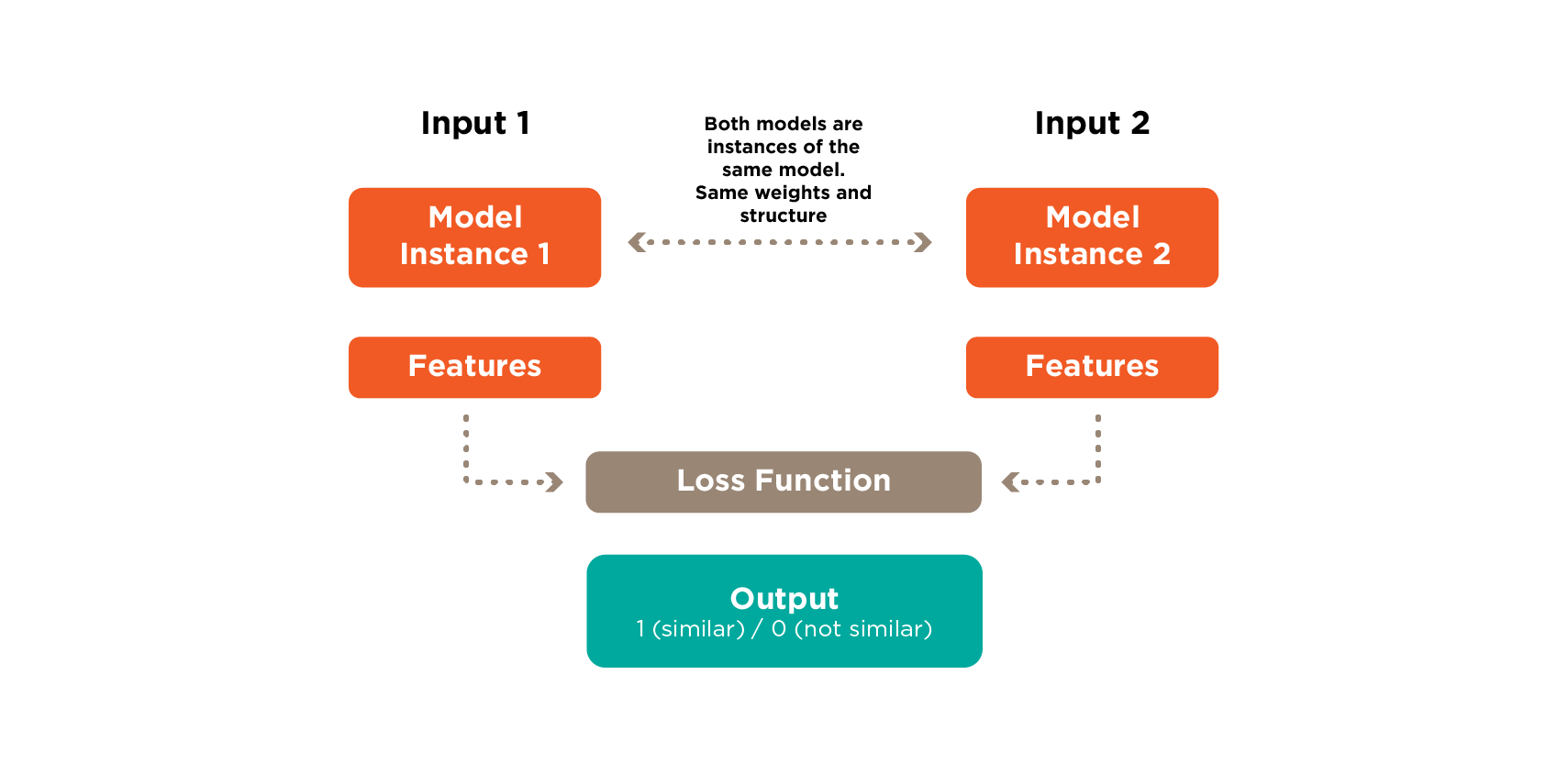

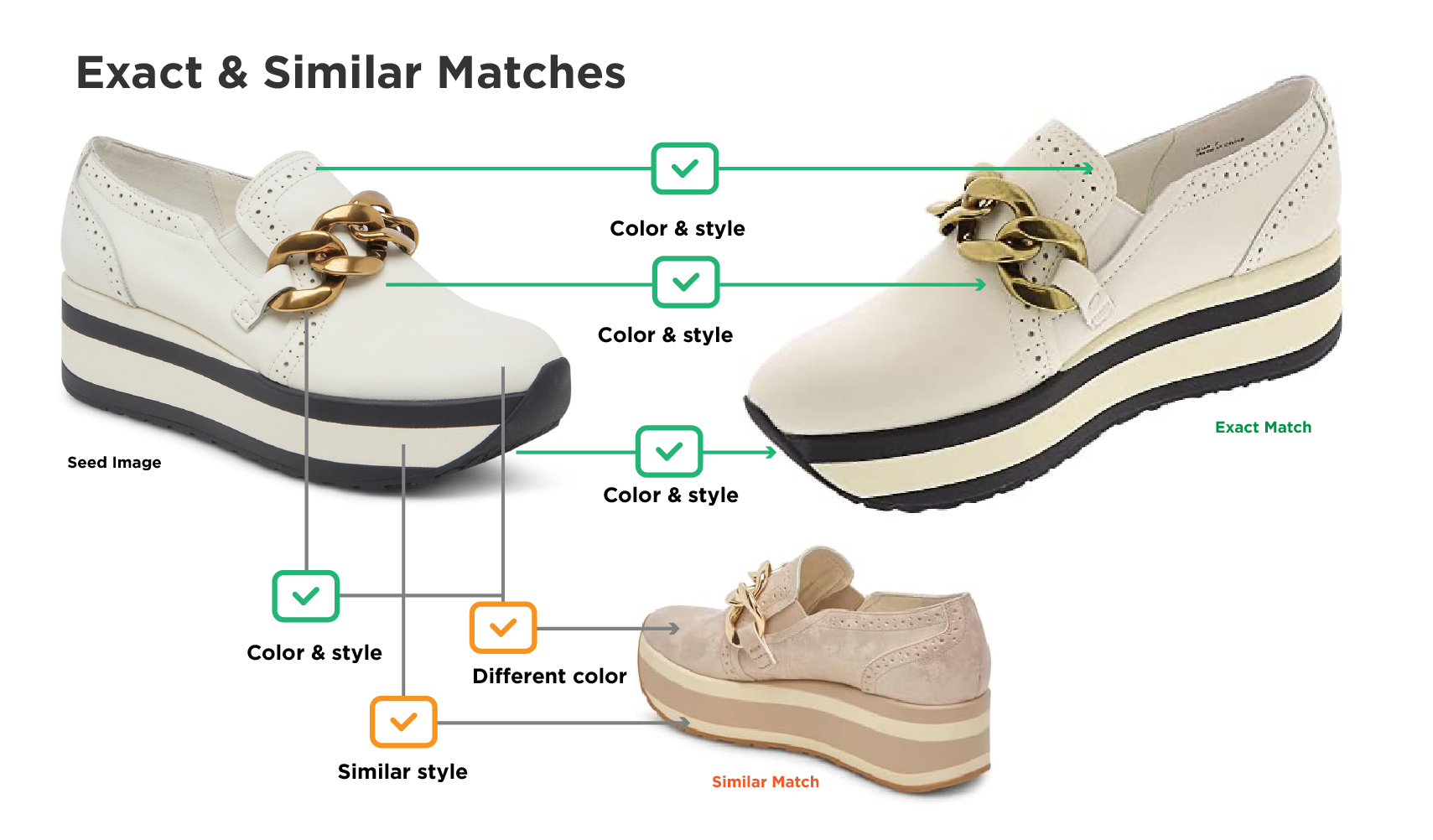

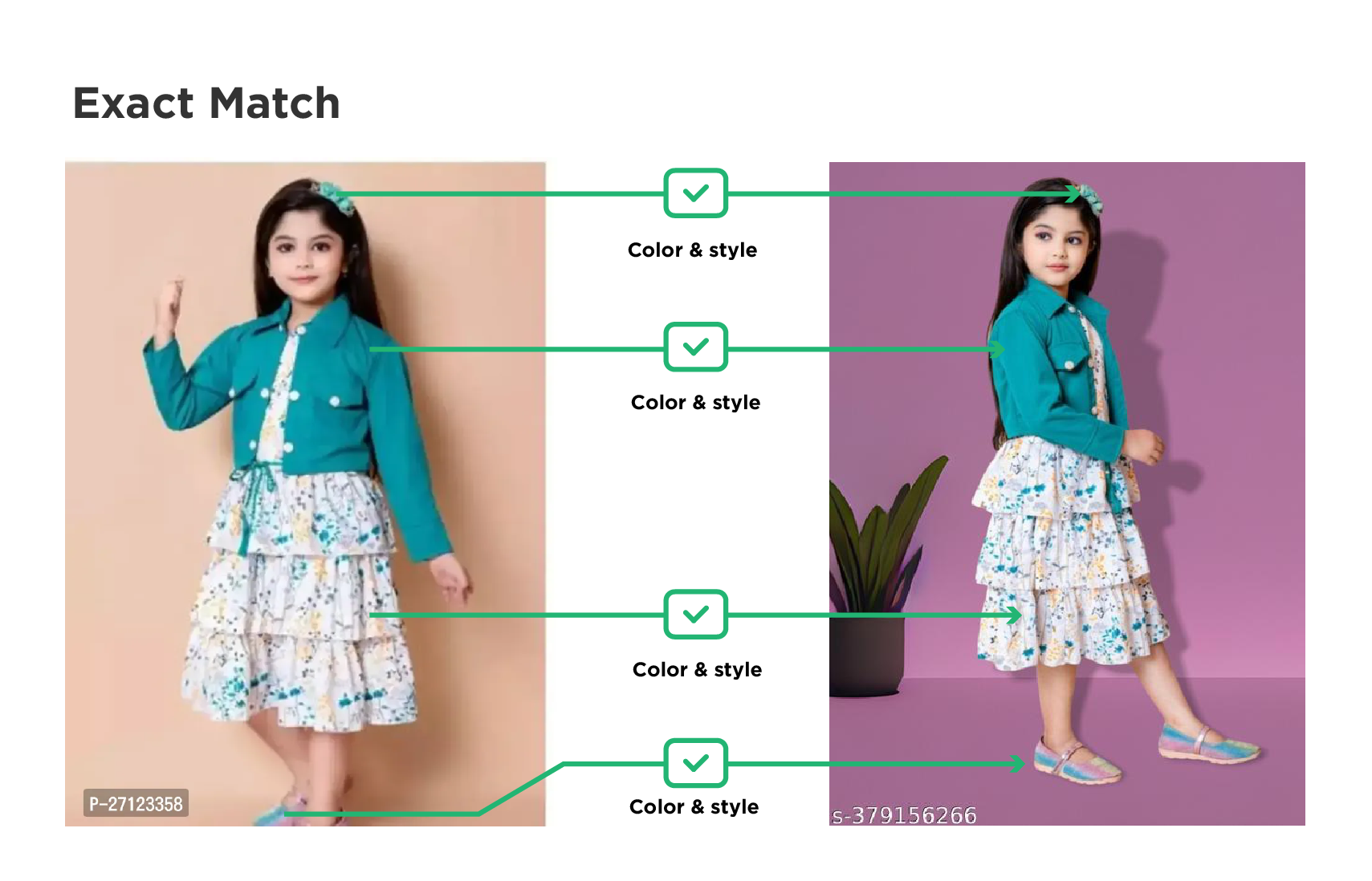

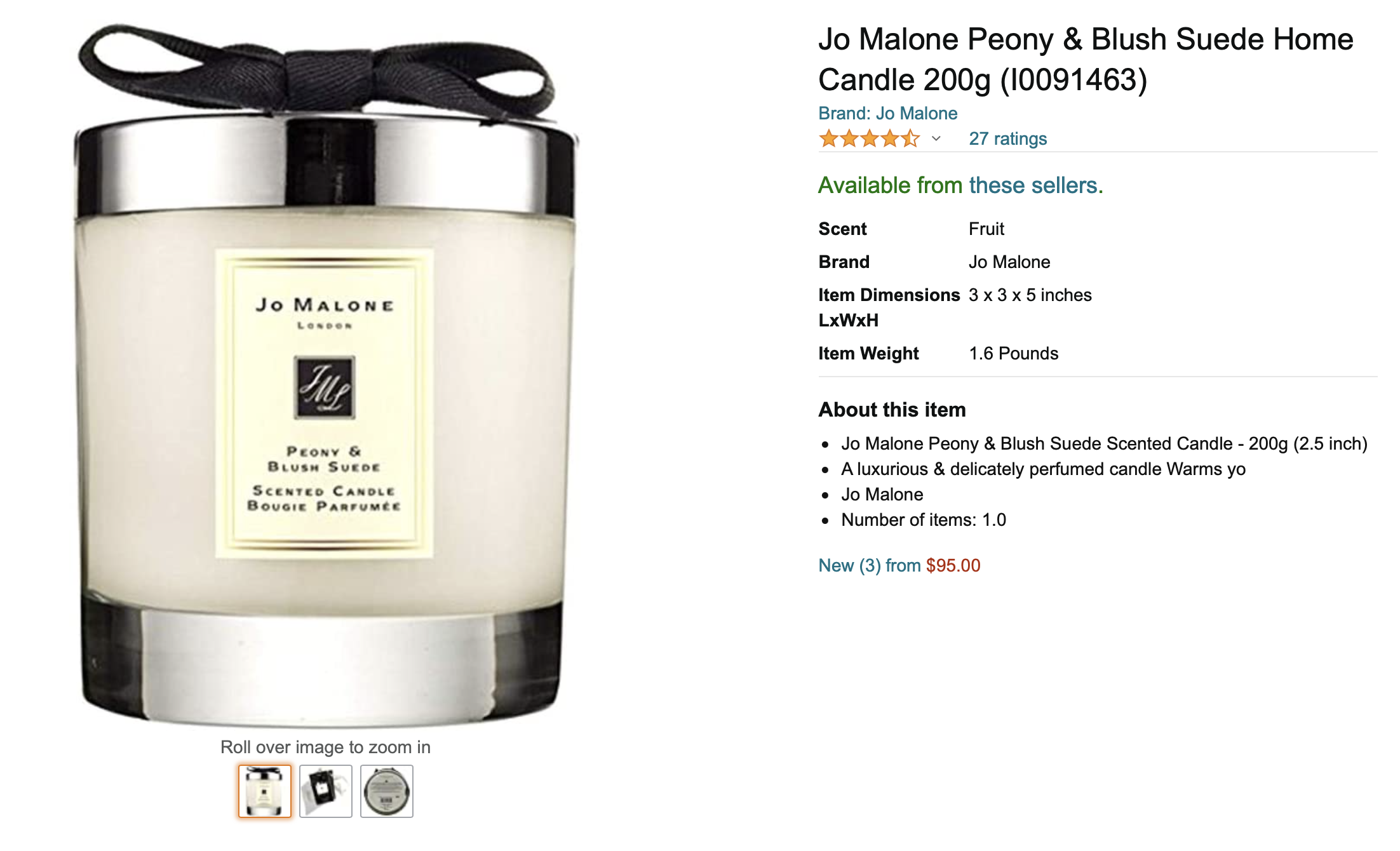

Accurate product matching across different websites is fundamental for competitive pricing intelligence, especially when matching similar and substitute products. Attribute tagging and extraction play a crucial role in this process by narrowing down potential matches more effectively, enabling matching for both exact and similar products, and tagging attributes such as brand, model, color, size, and technical specifications.

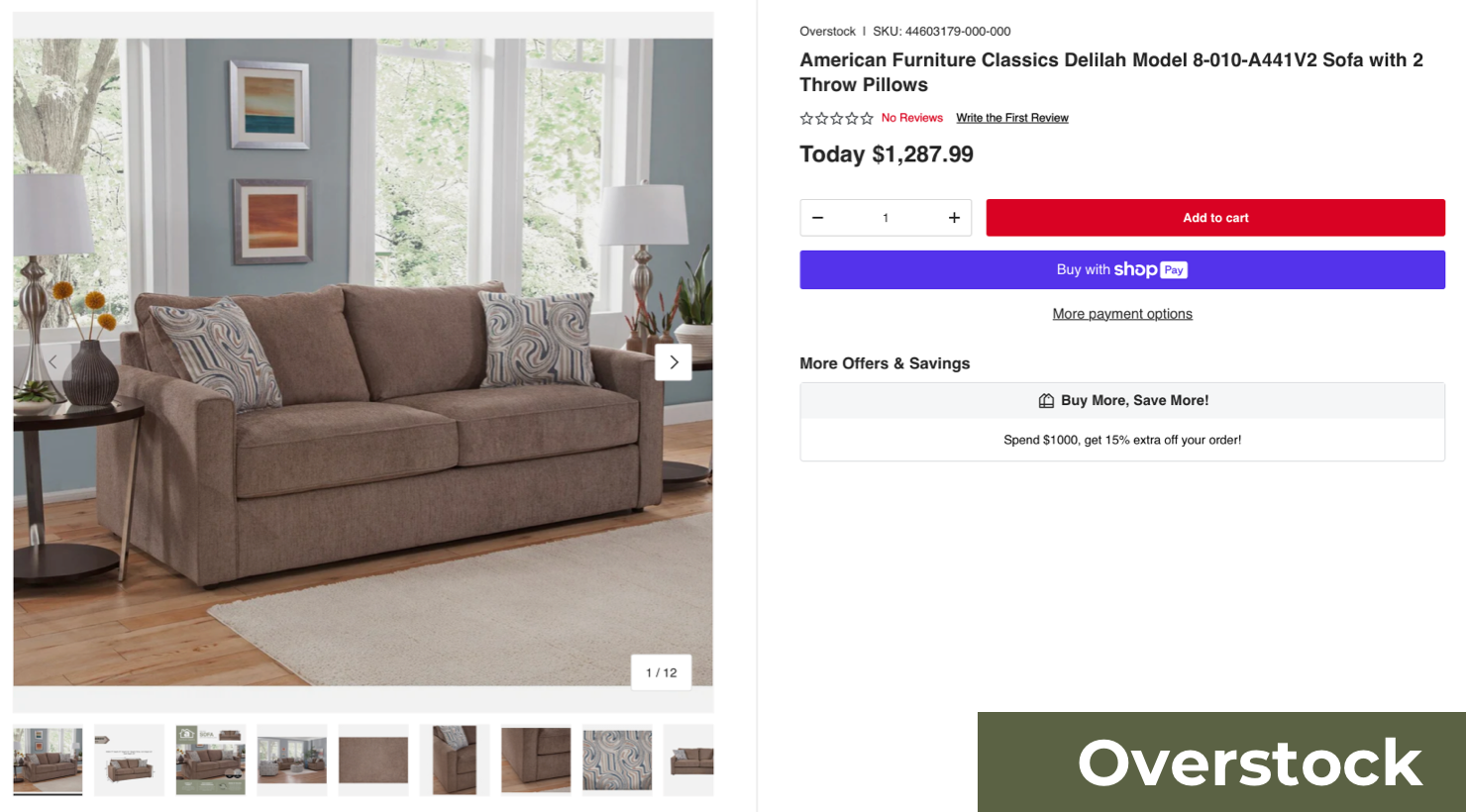

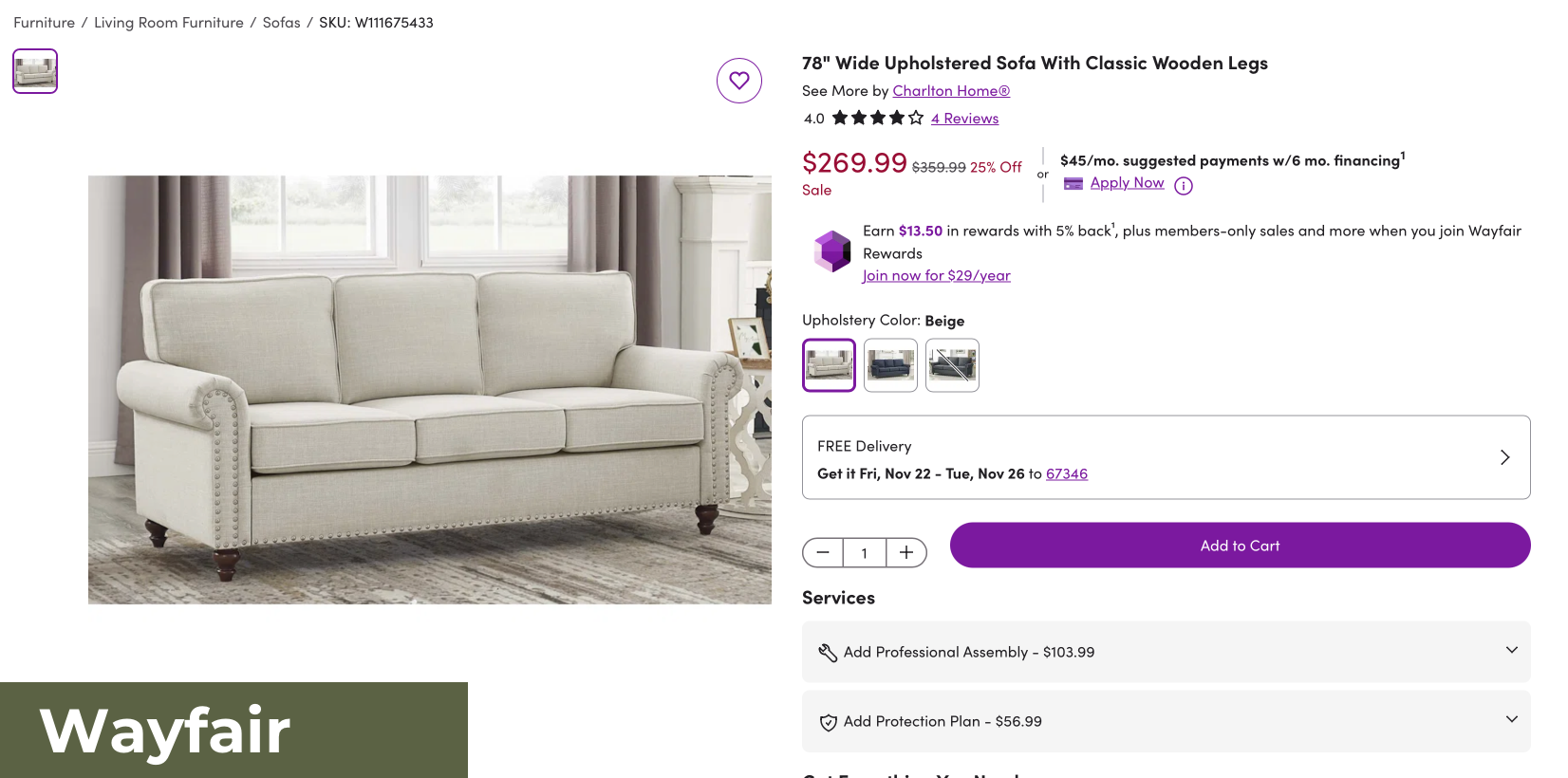

For instance, when matching similar 2-3 seater sofas from Wayfair and Overstock, tagging attributes like brand, color, size, and more is a must for accurate comparisons.

Taking a granular approach not only improves pricing strategies but also helps identify gaps in product offerings and opportunities for expansion.
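The narrowing step described above can be sketched as "blocking" on a few must-match attributes and then scoring the remaining candidates by attribute agreement. Everything here (the attribute names, the 0.5 threshold, the product records, and the function names) is a hypothetical illustration rather than an actual matching engine.

```python
def attribute_overlap(a, b, attributes):
    """Fraction of the listed attributes on which two tagged products agree."""
    agree = sum(
        1 for name in attributes
        if a.get(name) is not None and a.get(name) == b.get(name)
    )
    return agree / len(attributes)


def similar_matches(product, candidates, block_on, compare_on, threshold=0.5):
    """Keep candidates that share all blocking attributes, ranked by overlap score."""
    matches = []
    for candidate in candidates:
        if any(product.get(key) != candidate.get(key) for key in block_on):
            continue  # different category or seating: not comparable
        score = attribute_overlap(product, candidate, compare_on)
        if score >= threshold:
            matches.append((candidate["id"], score))
    return sorted(matches, key=lambda match: match[1], reverse=True)


# Made-up tagged sofas from two retailers.
wayfair_sofa = {"id": "W1", "category": "sofa", "seating": "2-3 seater",
                "color": "grey", "material": "fabric", "style": "modern"}
overstock_sofas = [
    {"id": "O1", "category": "sofa", "seating": "2-3 seater",
     "color": "grey", "material": "fabric", "style": "traditional"},
    {"id": "O2", "category": "sofa", "seating": "2-3 seater",
     "color": "blue", "material": "leather", "style": "modern"},
    {"id": "O3", "category": "sofa", "seating": "4+ seater",
     "color": "grey", "material": "fabric", "style": "modern"},
]

matches = similar_matches(
    wayfair_sofa, overstock_sofas,
    block_on=["category", "seating"],
    compare_on=["color", "material", "style"],
)
print(matches)
```

Here only `O1` survives: `O3` is removed by blocking on seating, and `O2` agrees on too few of the compared attributes.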

Fix Content Gaps and Improve Product Detail Page (PDP) Content

Attribute tagging plays a vital role in enhancing PDP content by ensuring adherence to brand integrity standards and content compliance guidelines across retail platforms. Tagging attributes allows for benchmarking against competitor content, identifying catalog gaps, and enriching listings with precise details.

This strategic tagging process can highlight missing or incomplete information, enabling targeted optimizations or even complete rewrites of PDP content to improve discoverability and drive conversions. With accurate attribute tagging, businesses can ensure each product page is fully optimized to capture consumer attention and meet retail standards.
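A simple way to surface missing or incomplete information is to compare a page's tagged attributes against a per-category checklist. The checklist `REQUIRED_ATTRIBUTES`, the brand "Acme", and the function `content_gaps` below are invented for this sketch.

```python
# Hypothetical checklist of attributes a PDP in each category should carry.
REQUIRED_ATTRIBUTES = {
    "desk": ["brand", "material", "color", "width_in"],
}


def content_gaps(pdp_tags, category):
    """Return required attributes that are absent or empty on a product page."""
    required = REQUIRED_ATTRIBUTES.get(category, [])
    return [name for name in required if pdp_tags.get(name) in (None, "")]


# Made-up tags extracted from one desk listing.
pdp = {"brand": "Acme", "material": "", "color": "Walnut"}
missing = content_gaps(pdp, "desk")
print(missing)
```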

Elevating the Search Experience

In today’s online retail marketplace, a superior search experience can be the difference between a sale and a lost customer. Through in-depth attribute tagging, vendors can enable more accurate filtering to improve search result relevance and facilitate easier product discovery for consumers.

By integrating rich product attributes extracted by AI into an in-house search platform, retailers can empower customers with refined and user-friendly search functionality. Enhanced search capabilities not only boost customer satisfaction but also increase the likelihood of conversions by helping shoppers find exactly what they’re looking for more quickly and with minimal effort.
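To make the link between tags and filtering concrete, the sketch below filters a tiny catalog by exact attribute values and computes facet counts for a filter sidebar. The catalog records and the helper names `filter_products` and `facet_counts` are assumptions for illustration only.

```python
from collections import Counter


def filter_products(products, **facets):
    """Keep products whose tags match every requested facet value exactly."""
    return [
        product for product in products
        if all(product["tags"].get(name) == value for name, value in facets.items())
    ]


def facet_counts(products, attribute):
    """Count how many products carry each value of one attribute."""
    return Counter(
        product["tags"][attribute]
        for product in products
        if attribute in product["tags"]
    )


# Made-up tagged catalog.
catalog = [
    {"id": "P1", "tags": {"type": "pen", "ink_color": "blue", "tip": "fine"}},
    {"id": "P2", "tags": {"type": "pen", "ink_color": "black", "tip": "fine"}},
    {"id": "P3", "tags": {"type": "pen", "ink_color": "blue", "tip": "medium"}},
    {"id": "P4", "tags": {"type": "pencil"}},
]

blue_fine = filter_products(catalog, type="pen", ink_color="blue", tip="fine")
ink_facet = facet_counts(filter_products(catalog, type="pen"), "ink_color")
print([p["id"] for p in blue_fine], dict(ink_facet))
```

Products with missing tags, such as `P4` here, silently drop out of filtered results, which is why tag completeness matters for discoverability.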

Pitfalls of Conventional Product Tagging Methods

Traditional methods of attribute tagging, such as manual and rule-based systems, have been significantly enhanced by the advent of machine learning. While these approaches may have sufficed in the past, they are increasingly proving inadequate in the face of today’s dynamic and expansive online marketplaces.

Scalability

As eCommerce catalogs expand to include thousands or even millions of products, the limitations of machine learning and rule-based tagging become glaringly apparent. As new product categories emerge, these systems struggle to keep pace, often requiring extensive revisions to existing tagging structures.

Inconsistencies and Errors

Not only is reliance on an entirely human-driven tagging process expensive, but it also introduces a significant margin for error. While machine learning can automate the tagging process, it’s not without its limitations. Errors can occur, particularly when dealing with large and diverse product catalogs.

As inventories grow more complex to handle diverse product ranges, the likelihood of conflicting or erroneous rules increases. These inconsistencies can result in poor search functionality, inaccurate product matching, and ultimately, a frustrating experience for customers, negating the benefits of tagging in the first place.

Speed

When product information changes or new attributes need to be added, manually updating tags across a large catalog is time-consuming. Slow tagging processes make it difficult for businesses to adapt quickly to emerging market trends, causing significant delays in listing new products and potentially missing crucial market opportunities.

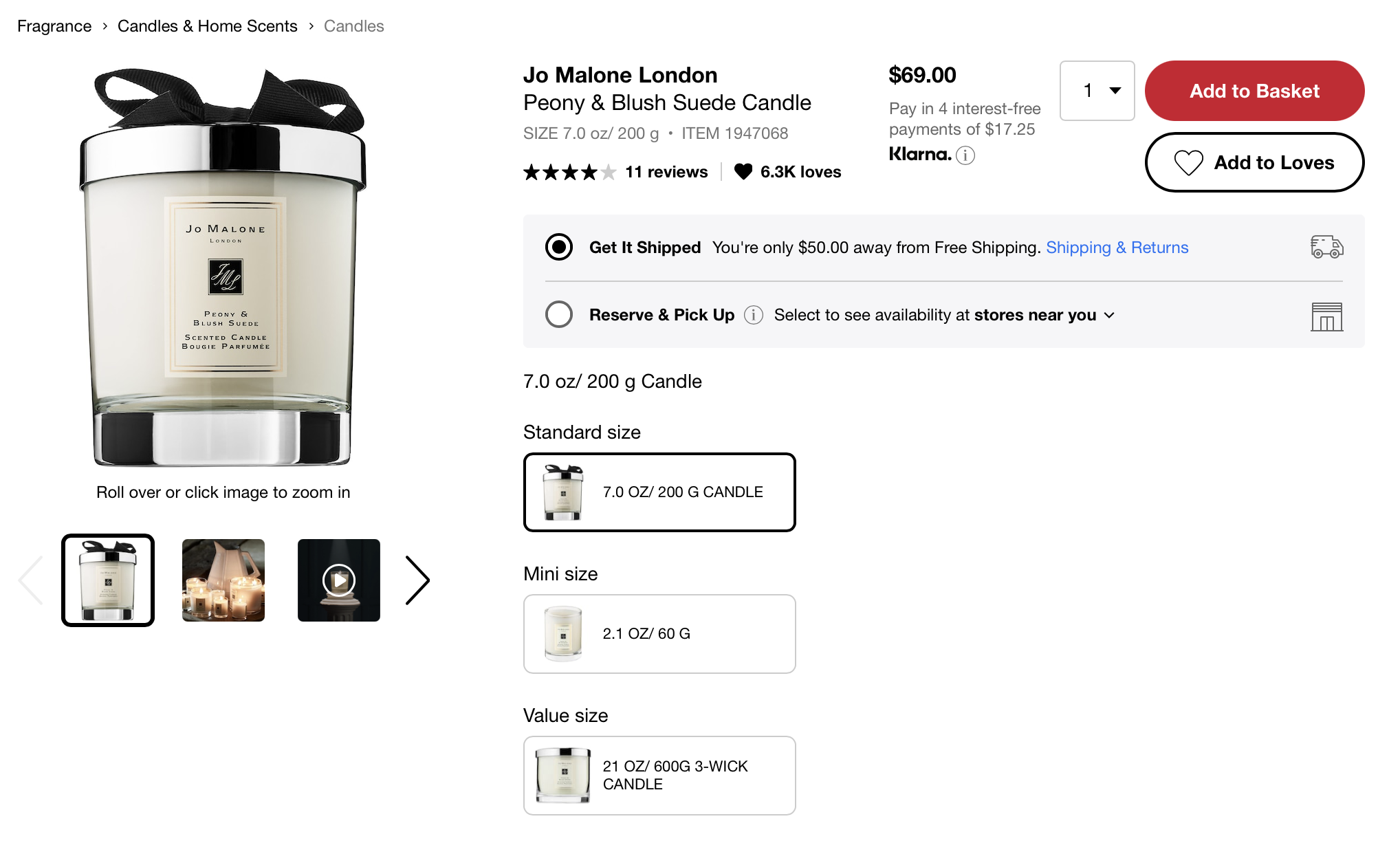

How DataWeave’s Advanced AI Capabilities Revolutionize Product Tagging

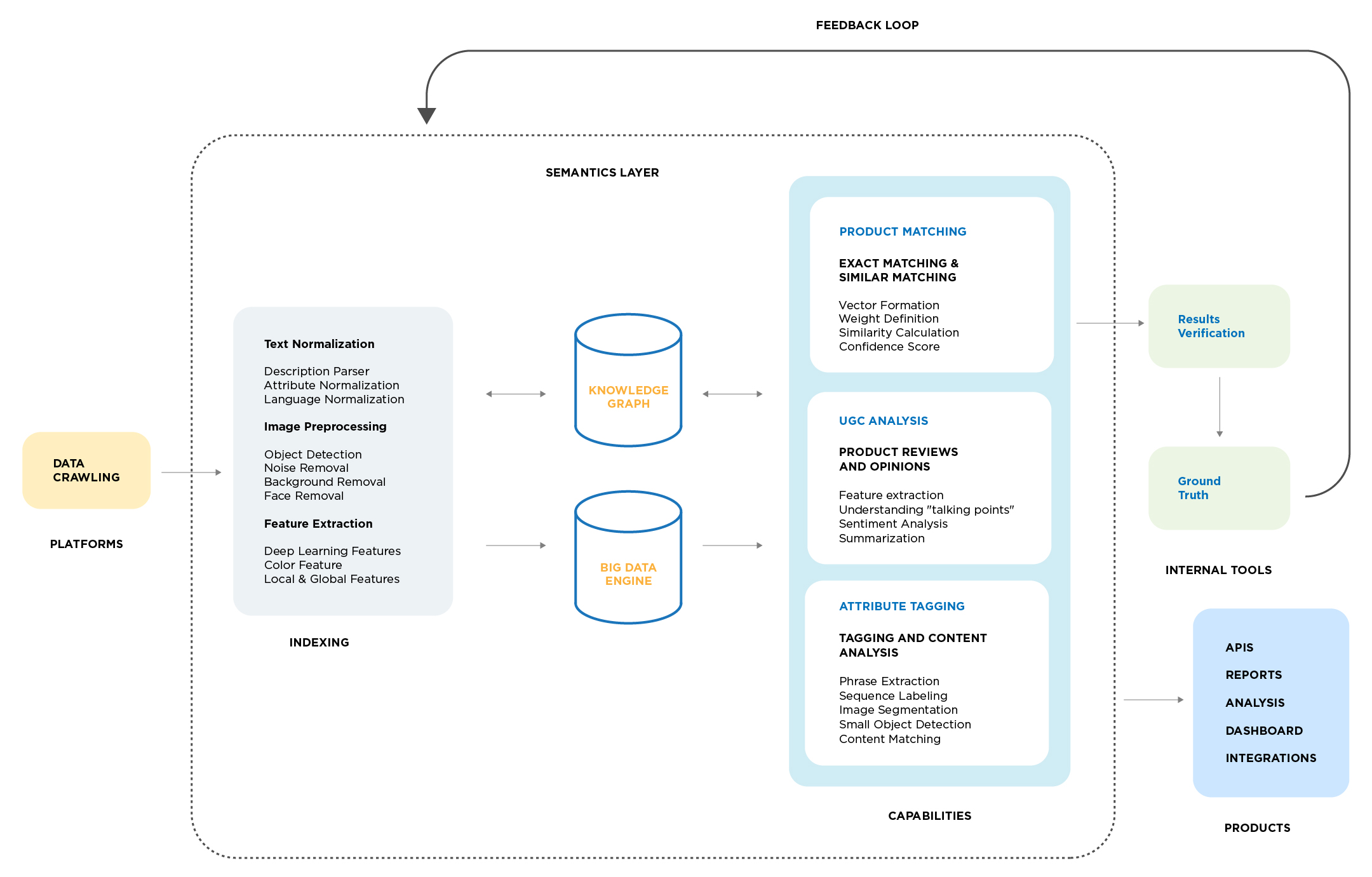

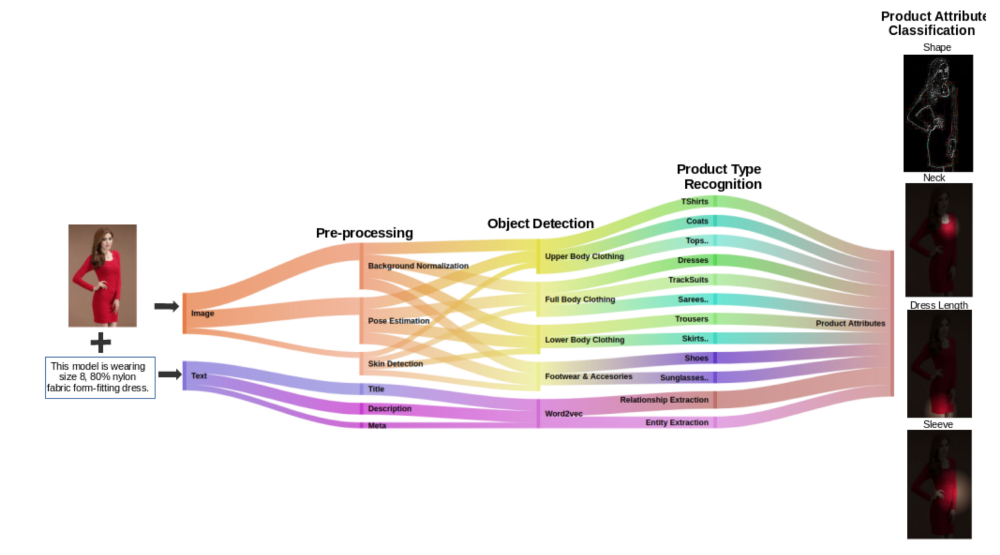

Advanced solutions leveraging RLMs and Generative AI offer promising alternatives capable of overcoming these challenges and unlocking new levels of efficiency and accuracy in product tagging.

DataWeave automates product tagging to address many of the pitfalls of conventional methods. We offer a powerful suite of capabilities, backed by our expertise, that empowers businesses to take their product tagging to new heights of accuracy and scalability.

Our sophisticated AI system brings an advanced level of intelligence to the tagging process.

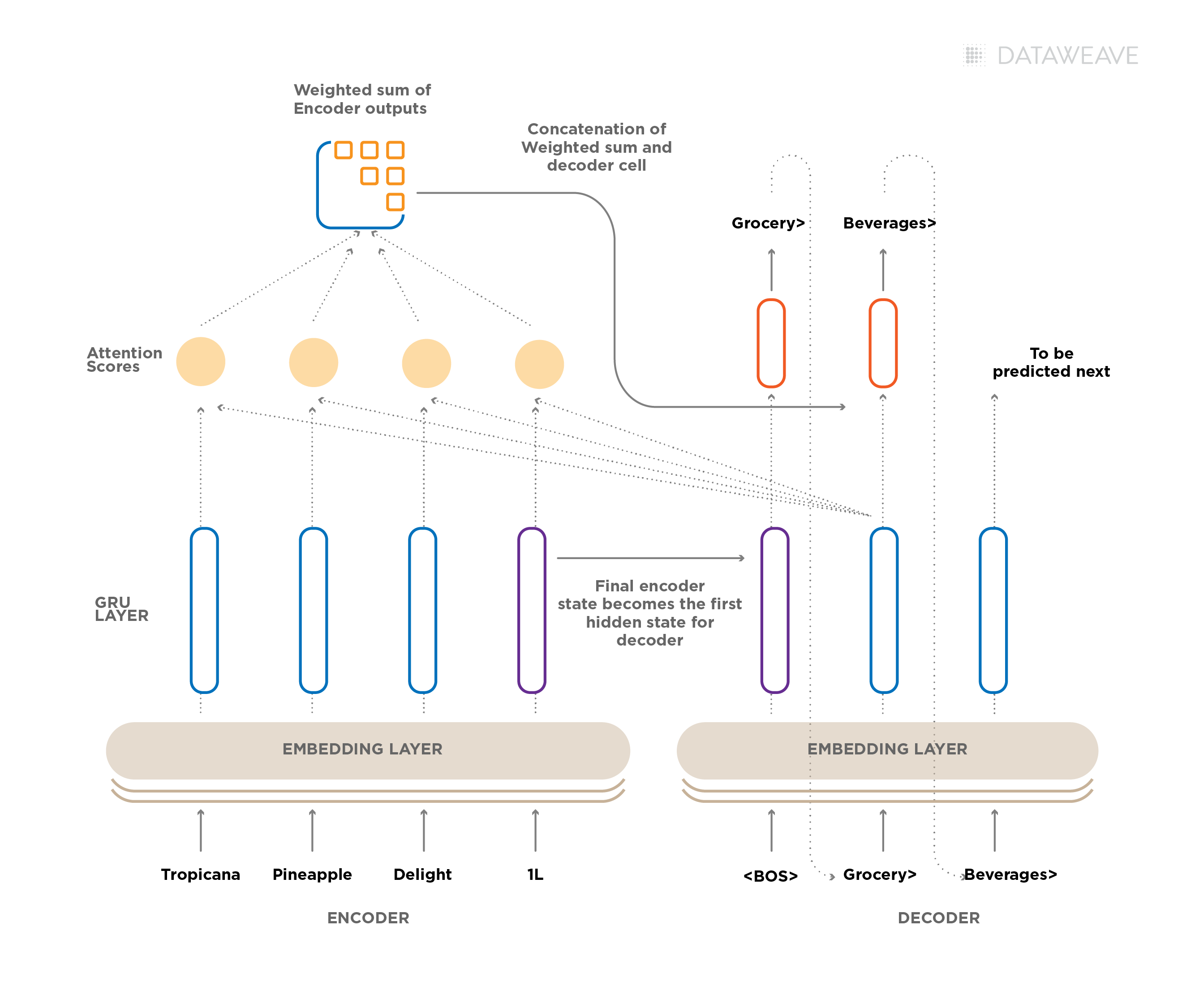

RLMs for Enhanced Semantic Understanding

Semantic Understanding of Product Descriptions

RLMs analyze the meaning and context of product descriptions rather than relying on keyword matching.

Example: “Smartphone with a 6.5-inch display” and “Phone with a 6.5-inch screen” are semantically similar, though phrased differently.

Attribute Extraction

RLMs can identify important product attributes (e.g., brand, size, color, model) even from noisy or unstructured data.

Example: Extracting “Apple” as a brand, “128GB” as storage, and “Pink” as the color from a mixed description.
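To show the shape of the input and output, here is a deliberately simple stand-in that uses a small vocabulary and a regular expression. It is not how an RLM works (a language model would not depend on hand-written lists), and the vocabularies, the sample description, and `extract_attributes` are all assumptions for this sketch.

```python
import re

# Tiny hand-written vocabularies, for illustration only.
KNOWN_BRANDS = {"apple": "Apple", "samsung": "Samsung"}
KNOWN_COLORS = {"pink": "Pink", "black": "Black", "blue": "Blue"}
STORAGE_PATTERN = re.compile(r"\b(\d+)\s?(GB|TB)\b", re.IGNORECASE)


def extract_attributes(description):
    """Pull brand, color, and storage out of a noisy product description."""
    words = re.findall(r"[a-z]+", description.lower())
    attributes = {}
    for word in words:
        if word in KNOWN_BRANDS:
            attributes.setdefault("brand", KNOWN_BRANDS[word])
        if word in KNOWN_COLORS:
            attributes.setdefault("color", KNOWN_COLORS[word])
    storage = STORAGE_PATTERN.search(description)
    if storage:
        attributes["storage"] = storage.group(1) + storage.group(2).upper()
    return attributes


tags = extract_attributes("NEW!! apple iphone - 128gb (pink) unlocked, free shipping")
print(tags)
```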

Identifying Implicit Relationships

RLMs find implicit relationships between products that traditional rule-based systems miss.

Example: Recognizing that “iPhone 12 Pro” and “Apple iPhone 12” are part of the same product family.

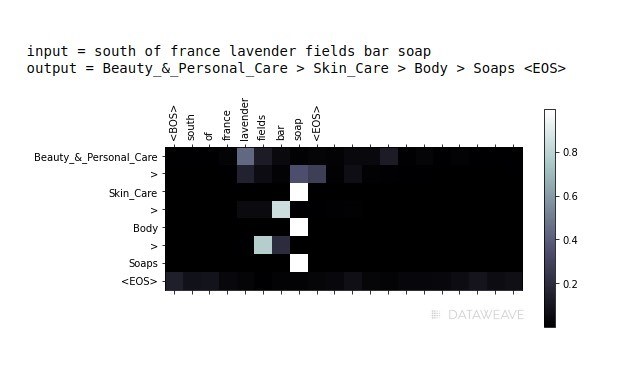

Synonym Recognition in Product Descriptions

Synonym Matching with Context

RLMs identify when different words or phrases describe the same product.

Examples: “Sneakers” = “Running Shoes”, “Memory” = “RAM” (in electronics)

Even subtle differences in wording, like “rose gold” vs. “pink”, are interpreted correctly.

Overcoming Brand-Specific Terminology

Some brands use their own terminology (e.g., “Retina Display” for Apple).

RLMs can map proprietary terms to more generic ones (e.g., Retina Display = High-Resolution Display).
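The effect of context-aware synonym and brand-term mapping can be approximated with a lookup keyed on both category and term. The table below is hand-written for illustration, whereas a language model would learn such mappings from data; the names `CANONICAL_TERMS` and `normalize_term` are assumptions.

```python
# Hypothetical mapping: (category, term) -> canonical term.
CANONICAL_TERMS = {
    ("electronics", "memory"): "RAM",
    ("electronics", "retina display"): "High-Resolution Display",
    ("footwear", "running shoes"): "Sneakers",
}


def normalize_term(term, category):
    """Map a term to its canonical form within a category, else leave it unchanged."""
    key = (category.strip().lower(), term.strip().lower())
    return CANONICAL_TERMS.get(key, term)


print(normalize_term("Memory", "Electronics"))
print(normalize_term("Retina Display", "Electronics"))
print(normalize_term("Memory", "Mattresses"))
```

Keying on the category keeps “Memory” from being rewritten to “RAM” outside electronics, for example in a memory foam mattress listing.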

Dealing with Ambiguities

RLMs analyze surrounding text to resolve ambiguities in product descriptions.

Example: Resolving “charger” to mean a “phone charger” when matched with mobile phones.
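A crude approximation of this kind of disambiguation is to count cue words in the surrounding text for each candidate sense. The sense inventory and cue words below are invented, and `resolve_term` is a toy stand-in for what a language model does with learned context.

```python
import re

# Hypothetical sense inventory with cue words for each sense.
SENSES = {
    "charger": {
        "phone charger": {"phone", "phones", "smartphone", "iphone", "mobile"},
        "laptop charger": {"laptop", "notebook", "macbook"},
        "ev charger": {"vehicle", "car", "ev"},
    },
}


def resolve_term(term, context):
    """Pick the sense whose cue words appear most often in the surrounding text."""
    senses = SENSES.get(term.lower())
    if not senses:
        return term
    words = set(re.findall(r"[a-z0-9]+", context.lower()))
    best_sense, best_score = max(
        ((sense, len(cues & words)) for sense, cues in senses.items()),
        key=lambda pair: pair[1],
    )
    # With no supporting cue words, keep the original ambiguous term.
    return best_sense if best_score > 0 else term


resolved = resolve_term("charger", "Fast charger compatible with iPhone and Android mobile phones")
print(resolved)
```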

Contextual Understanding for Improved Accuracy and Precision

By leveraging advanced natural language processing (NLP), DataWeave’s AI can process and understand the context of lengthy product descriptions and customer reviews, minimizing errors that often arise at human touch points. The solution interprets this text and extracts key information, dramatically improving the overall accuracy of product tags.

It excels at grasping subtle differences between similar products, sizes, and colors, and at tagging even minute differences between items, ensuring that each product is uniquely and accurately represented in a retailer’s catalog.

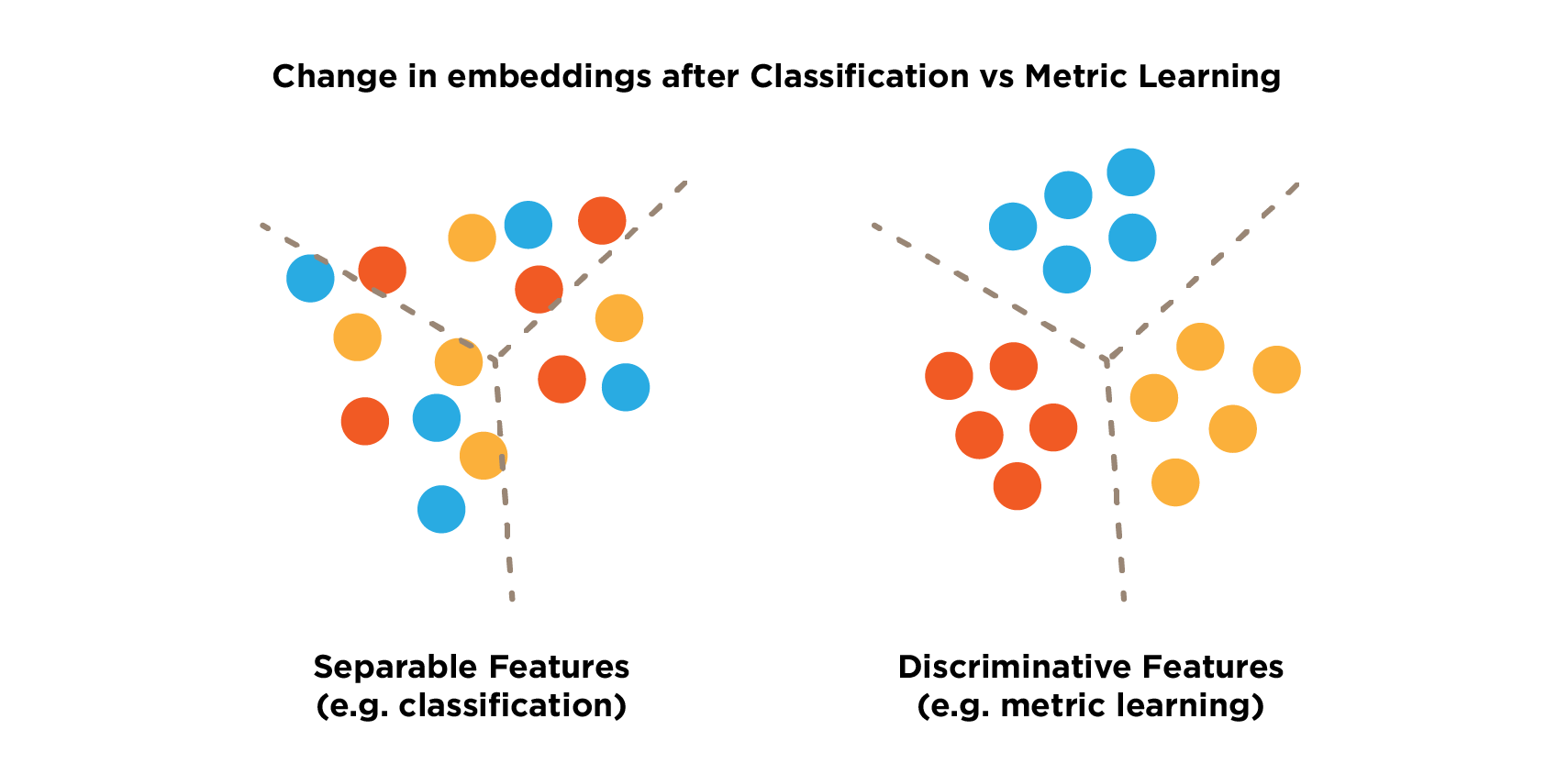

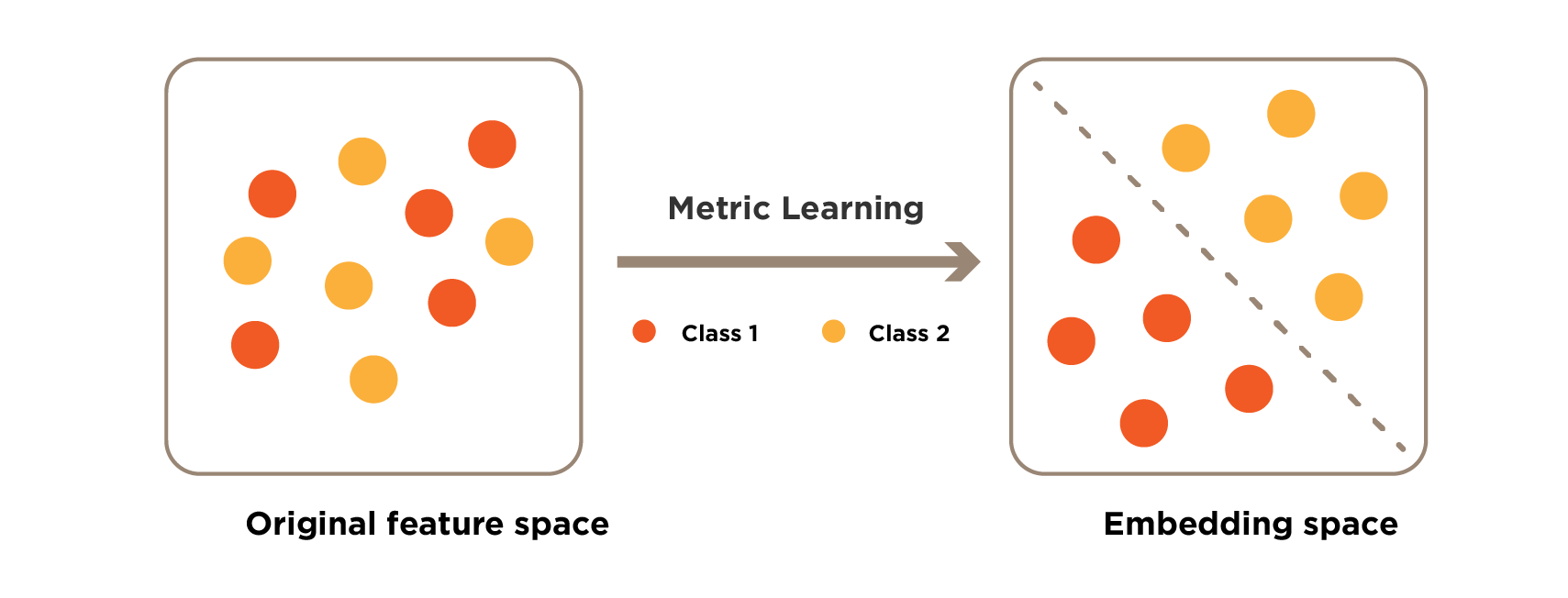

This has a major impact on exact and similarity-based product matching, and can help optimize the matching of similar and substitute products to enhance consumer search. At the same time, our AI can understand that the same term might have different meanings in various product categories, adapting its tagging approach based on the specific context of each item.

This deep comprehension ensures that even nuanced product attributes are accurately captured and tagged for easy discoverability by consumers.

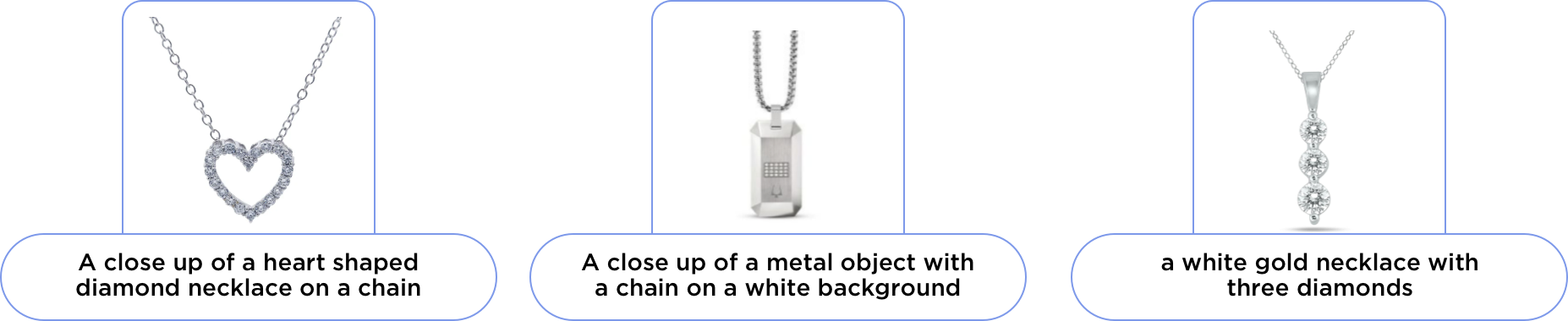

Case Study: Niche Jewelry Attributes

DataWeave’s advanced AI can assist in labeling the subtle attributes of jewelry by analyzing product images and generating prompts to describe the image. In this example, our AI identifies the unique shapes and materials of each item in the prompts.

The RLM can then extract key attributes from the prompt to generate tags. This assists in accurate product matching for searches as well as enhanced product recommendations based on similarities.

This multi-model approach provides the flexibility to adapt as product catalogs expand while keeping tagging consistent, yielding more robust results for consumers.
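The two-stage flow described in this case study (image to prompt, then prompt to tags) can be sketched as a small pipeline that accepts the two models as plain functions. Both models are replaced by hard-coded stand-ins here, and the function names, the file name, and the vocabulary are hypothetical; nothing below reflects DataWeave's actual models or APIs.

```python
def tag_image(image_path, describe_image, extract_attributes):
    """Chain an image-to-text step with a text-to-attributes step."""
    prompt = describe_image(image_path)
    return {"prompt": prompt, "tags": extract_attributes(prompt)}


def fake_describe_image(image_path):
    """Stand-in for an image captioning model; returns a fixed description."""
    return "a teardrop-shaped pendant in rose gold with a small pearl"


def fake_extract_attributes(prompt):
    """Stand-in for the language model; matches a tiny fixed vocabulary."""
    vocabulary = {
        "shape": ["teardrop", "round", "oval"],
        "material": ["rose gold", "silver", "pearl"],
    }
    text = prompt.lower()
    return {
        name: [value for value in values if value in text]
        for name, values in vocabulary.items()
    }


result = tag_image("pendant.jpg", fake_describe_image, fake_extract_attributes)
print(result["tags"])
```

Passing the two steps in as functions means either model can be swapped or upgraded without touching the rest of the pipeline.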

Unparalleled Scalability

DataWeave can rapidly scale tagging for new categories. The solution is built to handle the demands of even the largest eCommerce catalogs, enabling:

- Effortless management of extensive product catalogs: We can process and tag millions of products without compromising on speed or accuracy, allowing businesses to scale without limitations.

- Automated bulk tagging: New product lines or entire categories can be tagged automatically, significantly reducing the time and resources required for catalog expansion.

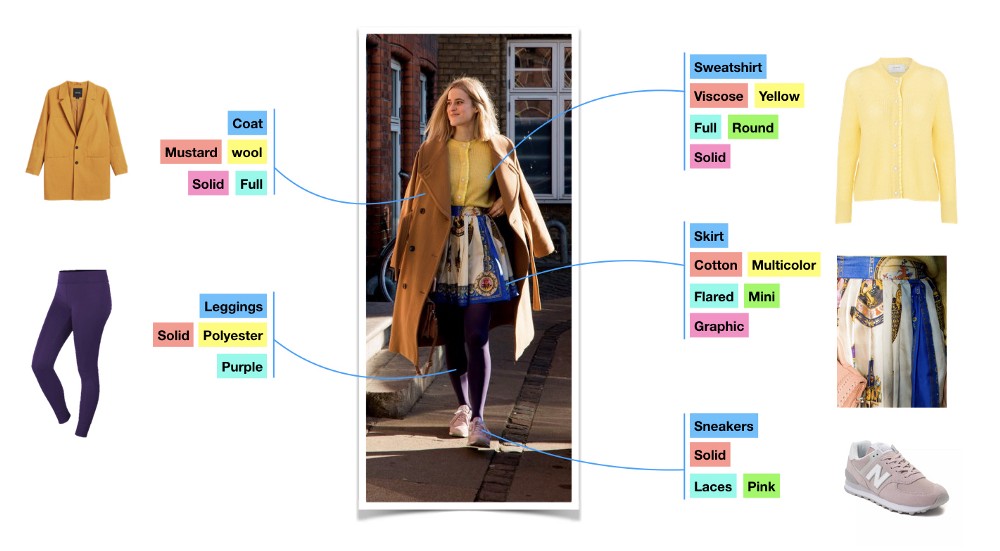

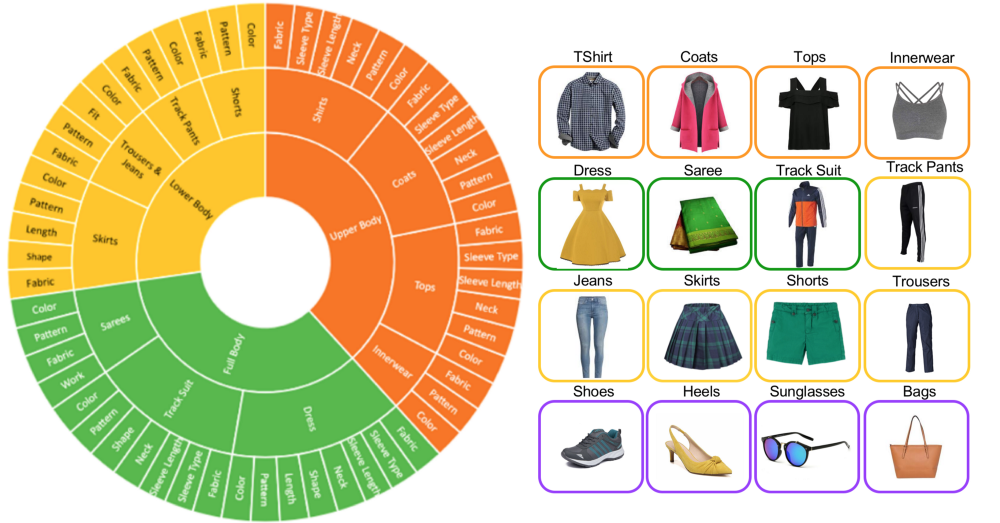

Normalizing Size and Color in Fashion

Style, color, and size are the core attributes in the fashion and apparel categories. Style attributes, which include design, appearance, and overall aesthetics, can be highly specific to individual product categories.

Our product matching engine can easily handle color and sizing complexity via our AI-driven approach combined with human verification. By leveraging advanced technology to identify and normalize identical and similar products from competitors, you can optimize your pricing strategy and product assortment to remain competitive. Using Generative AI in normalizing color and size in fashion is key to powering competitive pricing intelligence at DataWeave.
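As a simplified picture of what normalization produces, the sketch below maps retailer-specific color names and size labels onto shared values using lookup tables. The tables and `normalize_variant` are assumptions for illustration; the approach described above relies on Generative AI plus human verification, not on fixed dictionaries.

```python
# Hypothetical lookup tables for illustration only.
COLOR_FAMILIES = {
    "navy": "Blue",
    "cobalt": "Blue",
    "rose gold": "Pink",
    "blush": "Pink",
    "charcoal": "Grey",
}
SIZE_ALIASES = {
    "s": "S", "small": "S",
    "m": "M", "med": "M", "medium": "M",
    "l": "L", "large": "L",
    "xl": "XL", "x-large": "XL", "extra large": "XL",
}


def normalize_variant(color, size):
    """Map raw color and size strings onto shared values, if known."""
    color_key = " ".join(color.lower().split())
    size_key = " ".join(size.lower().replace(".", "").split())
    return (
        COLOR_FAMILIES.get(color_key, color.title()),
        SIZE_ALIASES.get(size_key, size.upper()),
    )


print(normalize_variant("Rose  Gold", "Medium"))
print(normalize_variant("navy", "X-Large"))
```

Once two retailers' variants share the same normalized color and size, their prices can be compared like for like.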

Continuous Adaptation and Learning

Our solution evolves with your business, improving continuously through feedback and customization for retailers’ specific product categories. The system can be fine-tuned to understand and apply specialized tagging for niche or industry-specific product categories. This ensures that tags remain relevant and accurate across diverse catalogs and as trends emerge.

The AI in our platform also continuously learns from user interactions and feedback, refining its tagging algorithms to improve accuracy over time.

Stay Ahead of the Competition With Accurate Attribute Tagging

In the current landscape, the ability to accurately and consistently tag product attributes is no longer a luxury—it’s essential for staying competitive. With advancements in Generative AI, companies like DataWeave are revolutionizing the way product tagging is handled, ensuring that every item in a retailer’s catalog is presented with precision and depth. As shoppers demand a more intuitive, seamless experience, next-generation tagging solutions are empowering businesses to meet these expectations head-on.

DataWeave’s innovative approach to attribute tagging is more than just a technical improvement; it’s a strategic advantage in an increasingly competitive market. By leveraging AI to scale and automate tagging processes, online retailers can keep pace with expansive product assortments, manage content more effectively, and adapt swiftly to changes in consumer behavior. In doing so, they can maintain a competitive edge.

To learn more, talk to us today!